Upload Model

upload_model(self, model_name: str, task: netspresso.compressor.client.utils.enum.Task, framework: netspresso.compressor.client.utils.enum.Framework, file_path: str, input_shapes: List[Dict[str, int]] = []) → netspresso.compressor.core.model.Model

Upload a model for compression.

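- Parameters

model_name (str) – The name of the model.

task (Task) – The task of the model.

framework (Framework) – The framework of the model.

file_path (str) – The path to the model file.

input_shapes (List[Dict[str, int]], optional) – The input shapes of the model. Defaults to [].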

- Raises

e – If an error occurs while uploading the model.

- Returns

Uploaded model object.

- Return type

Model

Details of Parameters

Task

class Task

An enumeration.

Available Task

| Name | Description |
|---|---|
| IMAGE_CLASSIFICATION | Image Classification |
| OBJECT_DETECTION | Object Detection |
| IMAGE_SEGMENTATION | Image Segmentation |
| SEMANTIC_SEGMENTATION | Semantic Segmentation |
| INSTANCE_SEGMENTATION | Instance Segmentation |
| PANOPTIC_SEGMENTATION | Panoptic Segmentation |
| OTHER | Other |

Example

from netspresso.compressor import Task

TASK = Task.IMAGE_CLASSIFICATION

Framework

class Framework

An enumeration.

Available Framework

| Name | Description |
|---|---|
| TENSORFLOW_KERAS | TensorFlow-Keras |
| PYTORCH | PyTorch GraphModule |
| ONNX | ONNX |

Example

from netspresso.compressor import Framework

FRAMEWORK = Framework.TENSORFLOW_KERAS

Note

- ONNX (.onnx)

Supported versions: PyTorch >= 1.11.x, ONNX >= 1.10.x.

If a model is defined in PyTorch, it should be converted into the ONNX format before being uploaded.

- PyTorch GraphModule (.pt)

Supported version: PyTorch >= 1.11.x.

If a model is defined in PyTorch, it should be converted into a GraphModule (torch.fx.GraphModule) before being uploaded.

The saved file must contain the structure of the model as well as the state dictionary (do not save only the state_dict).

How-to guide for converting a PyTorch model into a GraphModule.

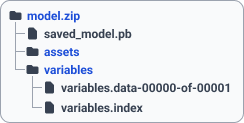

- TensorFlow-Keras (.h5, .zip)

Supported versions: TensorFlow 2.3.x to 2.8.x.

A model in Keras H5 (.h5) format must not include custom layers.

The saved file must contain the structure of the model as well as the weights (do not use save_weights).

If the model contains a custom layer, upload it in the TensorFlow SavedModel format (.zip).
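The file extensions listed in the note above suggest a simple pre-upload check. The following is a minimal sketch, not part of netspresso: `guess_framework_name` and the mapping table are hypothetical, and the values are the `Framework` member names kept as plain strings so that the snippet runs without the library installed.

```python
from pathlib import Path

# Extension-to-framework mapping taken from the note above.
# Values are Framework member names as strings (assumption: used only for illustration).
_EXTENSION_TO_FRAMEWORK = {
    ".onnx": "ONNX",
    ".pt": "PYTORCH",
    ".h5": "TENSORFLOW_KERAS",
    ".zip": "TENSORFLOW_KERAS",
}


def guess_framework_name(file_path: str) -> str:
    """Return the Framework member name that matches the file extension.

    Hypothetical helper: raises ValueError for extensions the note does not list.
    """
    suffix = Path(file_path).suffix.lower()
    if suffix not in _EXTENSION_TO_FRAMEWORK:
        raise ValueError(f"Unsupported model file extension: {suffix!r}")
    return _EXTENSION_TO_FRAMEWORK[suffix]


print(guess_framework_name("./model.h5"))  # TENSORFLOW_KERAS
```

If `Framework` is a standard Python `Enum`, as "An enumeration" suggests, `Framework[guess_framework_name(path)]` would look up the matching member by name. The extension alone does not confirm that the file satisfies the version and structure requirements above.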

Input Shapes

Note

For input shapes, use the same values that you used to train the model.

If the input shape of the model is dynamic, input_shapes is required.

If the input shape of the model is static, input_shapes is not required.

For example, for batch=1, channel=3, height=768, width=1024:

input_shapes = [{"batch": 1, "channel": 3, "dimension": [768, 1024]}]

Currently, only single-input models are supported.
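The input_shapes format described above can be assembled and sanity-checked before calling upload_model. This is a minimal sketch under stated assumptions: `make_input_shapes` is a hypothetical helper, not a netspresso function, and the positive-integer check is an assumption about what a sensible shape looks like rather than a documented rule.

```python
from typing import Dict, List, Sequence, Union

InputShapeDict = Dict[str, Union[int, List[int]]]


def make_input_shapes(batch: int, channel: int, dimension: Sequence[int]) -> List[InputShapeDict]:
    """Build the single-entry list used for the input_shapes argument.

    Hypothetical helper: returns exactly one entry because only
    single-input models are currently supported.
    """
    if not dimension:
        raise ValueError("dimension must contain at least one size")
    values = [batch, channel, *dimension]
    # Reject bools, non-integers, and non-positive sizes (assumed constraint).
    if any(isinstance(v, bool) or not isinstance(v, int) or v <= 0 for v in values):
        raise ValueError("batch, channel, and every dimension must be positive integers")
    return [{"batch": batch, "channel": channel, "dimension": list(dimension)}]


# batch=1, channel=3, height=768, width=1024
input_shapes = make_input_shapes(batch=1, channel=3, dimension=[768, 1024])
print(input_shapes)
```

The resulting list can be passed as the input_shapes argument of upload_model.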

Details of Returns

class Model(model_id: str, model_name: str, task: str, framework: str, model_size: float, flops: float, trainable_parameters: float, non_trainable_parameters: float, number_of_layers: int, input_shapes: List[netspresso.compressor.core.model.InputShape] = <factory>)

Represents an uploaded model.

model_id (str): The ID of the model.

model_name (str): The name of the model.

input_shapes (List[InputShape]): The input shapes of the model.

- InputShape Attributes:

batch (int): The batch size of the input tensor.

channel (int): The number of channels in the input tensor.

dimension (List[int]): The dimensions of the input tensor.

model_size (float): The size of the model.

flops (float): The FLOPs (floating point operations) of the model.

trainable_parameters (float): The number of trainable parameters in the model.

non_trainable_parameters (float): The number of non-trainable parameters in the model.

number_of_layers (int): The number of layers in the model.

Example

from netspresso.compressor import ModelCompressor, Task, Framework

compressor = ModelCompressor(email="YOUR_EMAIL", password="YOUR_PASSWORD")

model = compressor.upload_model(
    model_name="YOUR_MODEL_NAME",
    task=Task.IMAGE_CLASSIFICATION,
    framework=Framework.TENSORFLOW_KERAS,
    file_path="YOUR_MODEL_PATH",  # e.g. ./model.h5
    # Replace with the input shapes of your model.
    input_shapes=[{"batch": 1, "channel": 3, "dimension": [32, 32]}],
)

Output

>>> model

Model(
    model_id="5eeb0edb-57d2-4a20-adf4-a6c05516015d",
    model_name="YOUR_MODEL_NAME",
    task="image_classification",
    framework="tensorflow_keras",
    input_shapes=[InputShape(batch=1, channel=3, dimension=[32, 32])],
    model_size=12.9641,
    flops=92.8979,
    trainable_parameters=3.3095,
    non_trainable_parameters=0.0219,
    number_of_layers=0,
)
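To illustrate how the returned fields might be read, here is a minimal sketch that summarizes a model object in one line. The `Model` and `InputShape` dataclasses below are local stand-ins that mirror the documented signature so the snippet runs without netspresso, and `describe` is a hypothetical helper. With the real library, the object returned by upload_model would take the place of the stand-in.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class InputShape:
    """Local stand-in with the documented InputShape attributes."""
    batch: int
    channel: int
    dimension: List[int]


@dataclass
class Model:
    """Local stand-in with the fields from the documented Model signature."""
    model_id: str
    model_name: str
    task: str
    framework: str
    model_size: float
    flops: float
    trainable_parameters: float
    non_trainable_parameters: float
    number_of_layers: int
    input_shapes: List[InputShape] = field(default_factory=list)


def describe(model) -> str:
    """Summarize a model object in one line (hypothetical helper)."""
    if model.input_shapes:
        shape = model.input_shapes[0]  # only single-input models are supported
        shape_text = "x".join(str(v) for v in [shape.batch, shape.channel, *shape.dimension])
    else:
        shape_text = "unknown"
    total = model.trainable_parameters + model.non_trainable_parameters
    return (
        f"{model.model_name} ({model.framework}, {model.task}): "
        f"input {shape_text}, total parameters {total:.4f}"
    )


# Values copied from the Output shown above.
model = Model(
    model_id="5eeb0edb-57d2-4a20-adf4-a6c05516015d",
    model_name="YOUR_MODEL_NAME",
    task="image_classification",
    framework="tensorflow_keras",
    input_shapes=[InputShape(batch=1, channel=3, dimension=[32, 32])],
    model_size=12.9641,
    flops=92.8979,
    trainable_parameters=3.3095,
    non_trainable_parameters=0.0219,
    number_of_layers=0,
)
print(describe(model))
```

The summary reports the parameter total in whatever unit the API returns, since this section does not state the units of model_size, flops, or the parameter counts.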